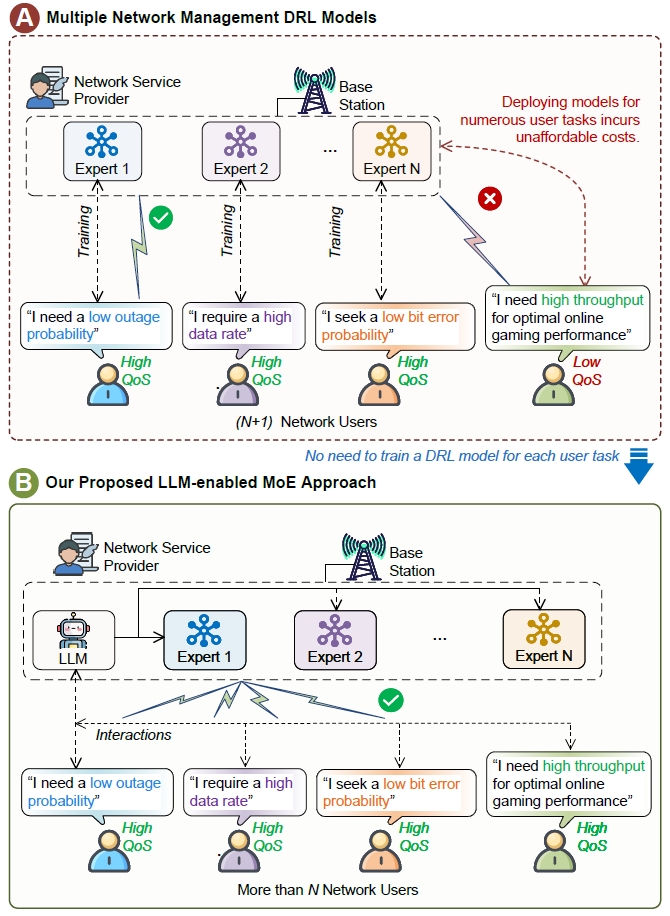

The figure above contrasts two network optimization strategies. Part A shows the drawback of training a distinct AI model for each user requirement: the cost of deploying an excessive number of models. Part B presents our LLM-enabled mixture-of-experts (MoE) approach, which uses a limited set of deep reinforcement learning (DRL) models to address a variety of user tasks efficiently.

The problem we solve: How can we achieve effective network optimization without using numerous DRL models individually trained for each specific task?

🔧 Environment Setup

To establish a new conda environment, run the following command:

conda create --name moeopt python==3.7

⚡ Activate Environment

Activate the environment with:

conda activate moeopt

📦 Install Required Packages

Install the necessary packages one by one using pip:

pip install torch

pip install opencv-python==4.1.2.30

pip install scipy

pip install torchvision

pip install scikit-image

🏃♀️ Run the Program

Execute main.py to initiate the program.
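Before executing main.py, it can help to confirm that the packages installed above are importable in the active environment. The snippet below is a minimal sketch and is not part of this repository; the helper name `missing_modules` and the `REQUIRED` mapping are introduced here for illustration only. It relies on the fact that `opencv-python` is imported as `cv2` and `scikit-image` as `skimage`.

```python
import importlib.util
from typing import Dict, List

# pip package name -> top-level module name used in import statements.
REQUIRED: Dict[str, str] = {
    "torch": "torch",
    "opencv-python": "cv2",
    "scipy": "scipy",
    "torchvision": "torchvision",
    "scikit-image": "skimage",
}


def missing_modules(module_names: List[str]) -> List[str]:
    """Return the top-level module names that cannot be found."""
    return [
        name for name in module_names
        if importlib.util.find_spec(name) is None
    ]


if __name__ == "__main__":
    missing = missing_modules(list(REQUIRED.values()))
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

If any module is reported missing, rerun the corresponding pip command inside the activated `moeopt` environment.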

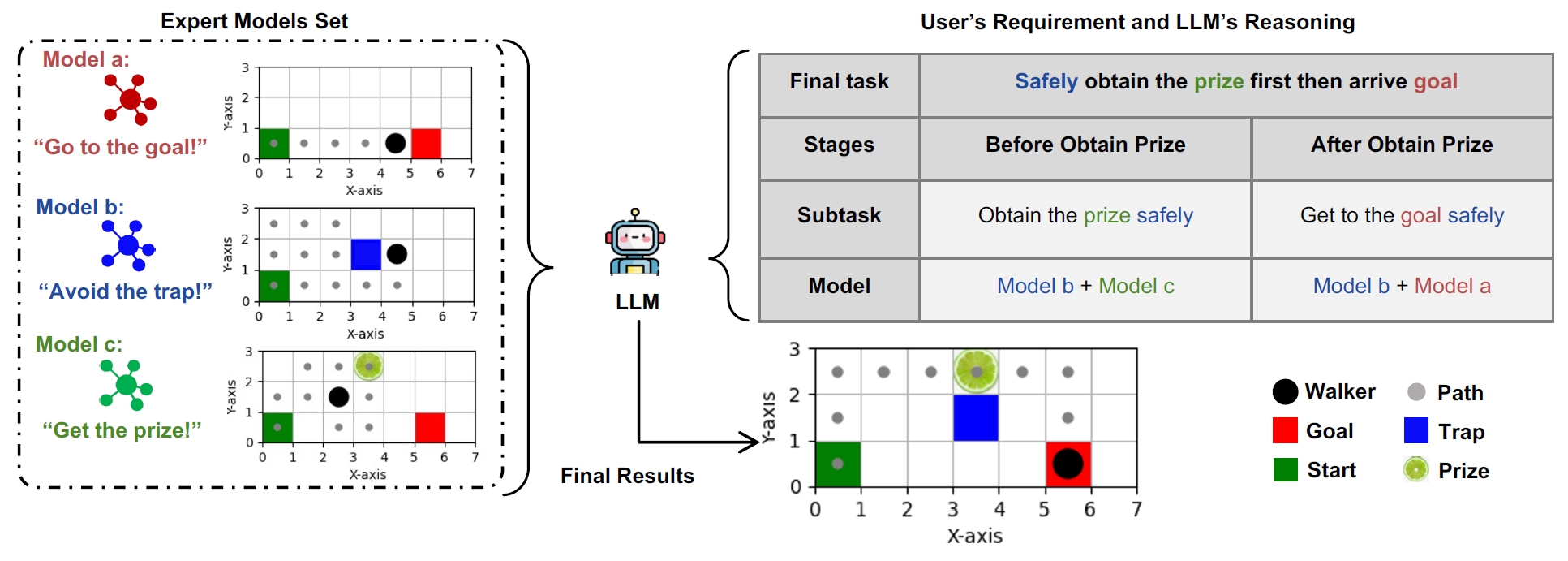

🔍 Case Study 1: Maze Navigation Task

The LLM-enabled MoE framework applied to a maze navigation task. An ensemble of DRL models, each trained for a different task, serves as the set of experts; the LLM infers what the user's task requires and draws on these experts to address it.
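The idea of reusing a small set of experts can be illustrated with a toy sketch. It is not the code in this repository: the expert names, the four-action layout, and the functions `combine_experts` and `select_action` are all hypothetical, and the gate weights are simply assumed to have been produced by the LLM from the user's request. Each expert returns one score per action, and the weighted sum of those scores decides the move.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical expert interface: observation -> one score per action.
Expert = Callable[[Sequence[float]], List[float]]

ACTIONS = ["up", "down", "left", "right"]  # assumed action layout


def combine_experts(
    experts: Dict[str, Expert],
    weights: Dict[str, float],
    observation: Sequence[float],
) -> List[float]:
    """Weighted sum of the experts' per-action scores (weights normalized)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("gate weights must sum to a positive value")
    combined = [0.0] * len(ACTIONS)
    for name, weight in weights.items():
        scores = experts[name](observation)
        if len(scores) != len(ACTIONS):
            raise ValueError("expert %r returned a wrong-sized score list" % name)
        for i, score in enumerate(scores):
            combined[i] += (weight / total) * score
    return combined


def select_action(scores: Sequence[float]) -> int:
    """Index of the highest-scoring action."""
    return max(range(len(scores)), key=lambda i: scores[i])


if __name__ == "__main__":
    # Stand-ins for trained DRL policies; real experts would be networks.
    toy_experts: Dict[str, Expert] = {
        "shortest_path": lambda obs: [0.1, 0.2, 0.1, 0.6],
        "avoid_hazard": lambda obs: [0.7, 0.1, 0.1, 0.1],
    }
    # Assumed to come from the LLM after reading the user's requirement.
    gate = {"shortest_path": 0.25, "avoid_hazard": 0.75}
    scores = combine_experts(toy_experts, gate, observation=[0.0, 0.0])
    print(ACTIONS[select_action(scores)])
```

Changing only the gate weights changes the behavior without training a new model, which is the point of keeping the expert set small.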

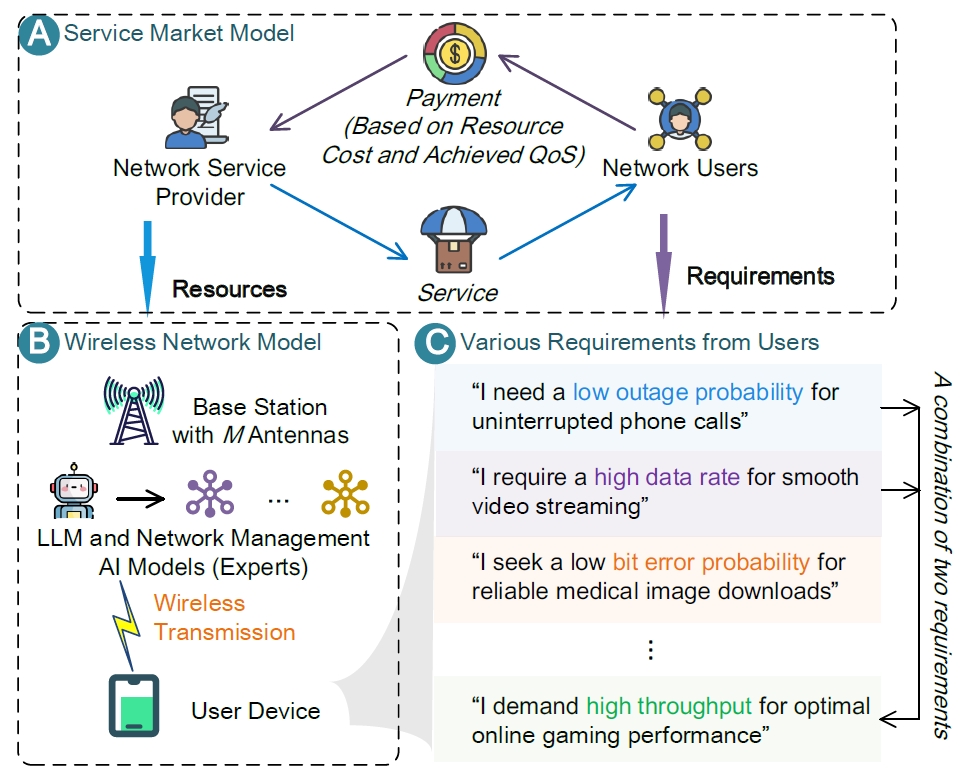

🔍 Case Study 2: Service Provider Utility Maximization Problem

Illustration of the system model, market interactions, and the impact of varying user requirements on service payments and power allocation strategies.

📚 Cite Our Work

If our code aids your research, please cite our work:

@article{du2024mixture,
  title={Mixture of Experts for Network Optimization: A Large Language Model-enabled Approach},
  author={Du, Hongyang and Liu, Guangyuan and Lin, Yijing and Niyato, Dusit and Kang, Jiawen and Xiong, Zehui and Kim, Dong In},
  journal={arXiv preprint arXiv:2402.09756},
  year={2024},
  month={Feb.}
}